Python3

- Use Python 3 to collect price and image information.

- BeautifulSoup and Selenium are used for this purpose.

- Let me show you how it works on the actual Yahoo Japan Auctions site.

* Here are the highlights of the YouTube tutorial.

Python can be used for web scraping and crawling for many purposes. One useful application is an auction market analyzer. While you sleep or are out of the office, your robot can work for you.

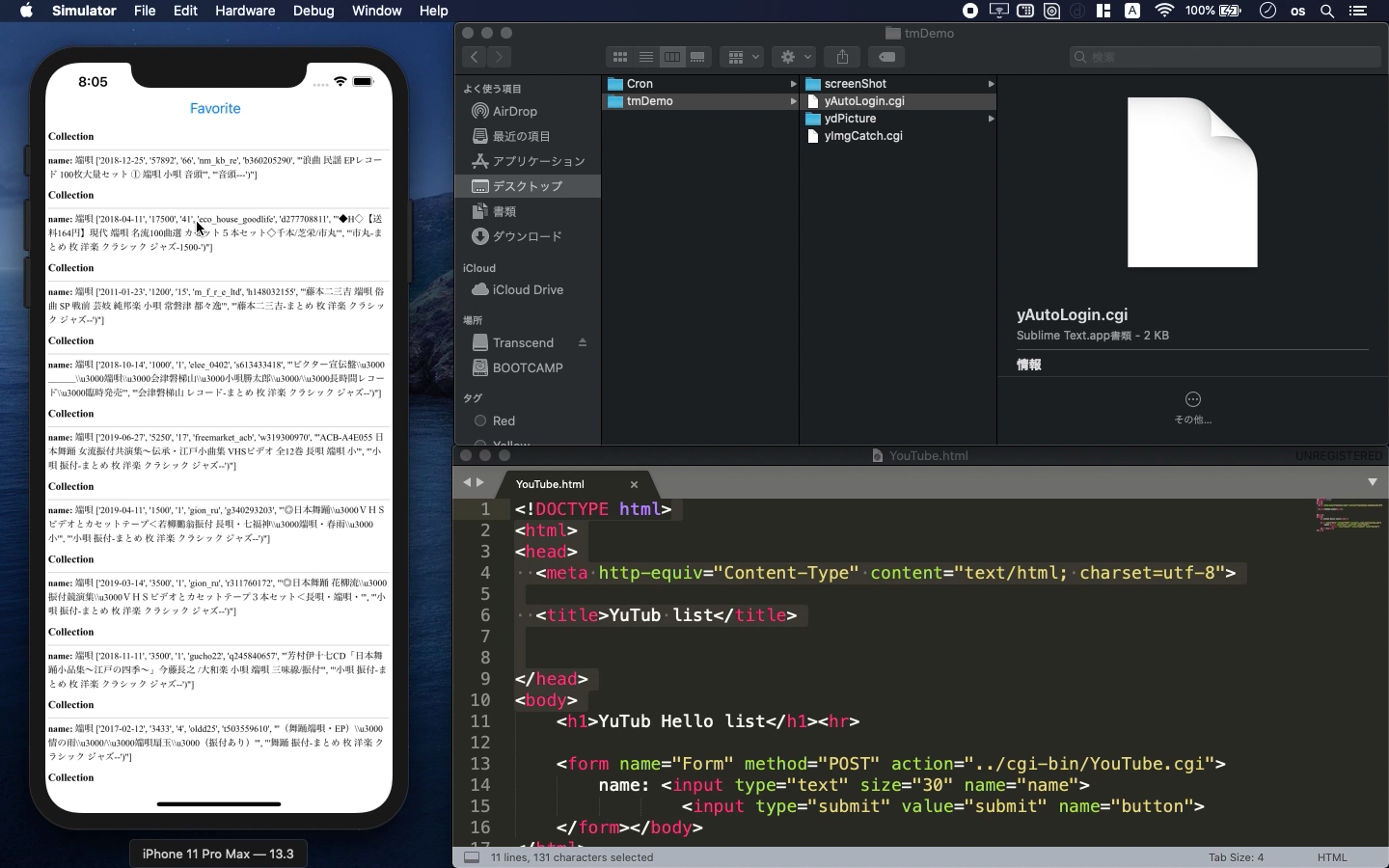

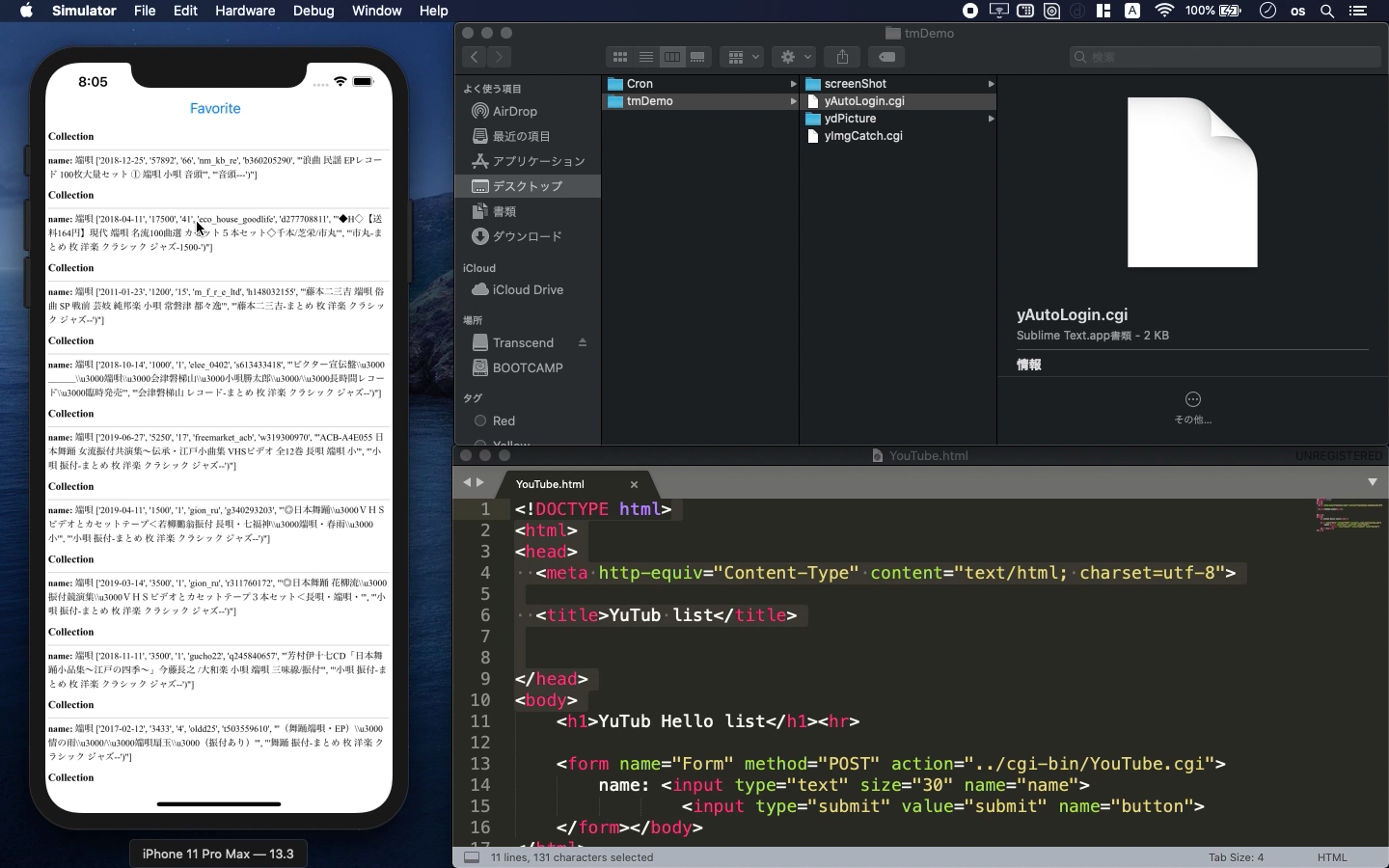

I often use it from my iPhone for my hobby. If you put an HTML form like this on your server, you can post a keyword and have it analyzed.

    <form name="Form" method="POST" action="../cgi-bin/YouTube.cgi">
      name: <input type="text" size="30" name="name">
      <input type="submit" value="submit" name="button">
    </form></body>
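
On the server side, the script named in `action` receives the `name` field as a URL-encoded POST body. Here is a minimal sketch of that parsing step using only the standard library. The helper name `extract_keyword` is hypothetical, and the original `YouTube.cgi` may well handle this differently.

```python
from urllib.parse import parse_qs


def extract_keyword(body: str, field: str = "name") -> str:
    """Return the first value of `field` from a URL-encoded form body.

    Hypothetical helper: `field` defaults to "name" because that is the
    name of the text input in the form above.
    """
    # keep_blank_values=True so an empty submission yields "" instead of a miss.
    values = parse_qs(body, keep_blank_values=True).get(field, [""])
    return values[0].strip()


if __name__ == "__main__":
    # Simulated body, as a browser would send it for the form above.
    print(extract_keyword("name=vintage+camera&button=submit"))
```

In a real CGI script the body would be read from standard input, with its length given by the `CONTENT_LENGTH` environment variable.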

If you set a timer such as crontab, you can also use my code as an automatic analyzer. Now, let me show you which libraries I used.

    import logging
    import os.path
    import time

    import requests
    from bs4 import BeautifulSoup
    from selenium import webdriver
    from selenium.webdriver.common.keys import Keys

    # Directory that contains this script.
    _dir = os.path.dirname(os.path.abspath(__file__))

This can also be used on a Linux server. This time, though, let me show you how it works on a Mac. Before you start, you need to install ChromeDriver with the brew command.

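    # Excerpt from inside a function; target_url is defined elsewhere.
    try:
        driver = webdriver.Chrome()
    except Exception as e:
        logging.error(e)
        return None

    # Load the target page and parse the rendered HTML.
    driver.get(target_url)
    bs4 = BeautifulSoup(driver.page_source, "html.parser")

    # Open the login page; after login it redirects to the watchlist.
    login_url = "https://login.yahoo.co.jp/config/login?.src=www&.done=https://auctions.yahoo.co.jp/openwatchlist/jp/show/mystatus?select=watchlist&watchclosed=0"
    driver.get(login_url)
    time.sleep(1)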

Here are the basic steps to log in to this Yahoo site.

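    from selenium.webdriver.common.by import By

    # find_element_by_name() was removed in Selenium 4.3;
    # find_element(By.NAME, ...) is the equivalent call.
    driver.find_element(By.NAME, "login").send_keys(login_id)
    driver.find_element(By.NAME, "btnNext").click()
    time.sleep(1)

    driver.find_element(By.NAME, "passwd").send_keys(password)
    driver.find_element(By.NAME, "btnSubmit").click()
    time.sleep(1)

    # Scroll down a little so the list is visible.
    driver.execute_script("window.scrollTo(0, 500);")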
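
The `login_id` and `password` variables used in the login steps have to be supplied by you. One option, sketched here as an assumption rather than as the tutorial's own method, is to read them from environment variables so they stay out of the script. The variable names `YAHOO_LOGIN_ID` and `YAHOO_PASSWORD` and the helper `load_credentials` are hypothetical.

```python
import os


def load_credentials(env=os.environ):
    """Read the login ID and password from environment variables.

    YAHOO_LOGIN_ID and YAHOO_PASSWORD are hypothetical variable names
    chosen for this sketch; use whatever names suit your setup.
    """
    try:
        return env["YAHOO_LOGIN_ID"], env["YAHOO_PASSWORD"]
    except KeyError as missing:
        raise RuntimeError(f"environment variable {missing} is not set") from None


if __name__ == "__main__":
    # Demonstration with a plain dict standing in for os.environ.
    login_id, password = load_credentials(
        {"YAHOO_LOGIN_ID": "example-id", "YAHOO_PASSWORD": "example-pass"}
    )
    print(login_id)
```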

You need to set your login ID and password in the designated variables (`login_id` and `password`). Your robot types them very quickly, so you need the sleep calls to give each page time to load. You can also take a screenshot with the following command.

    driver.save_screenshot('testY.png')

This saves the screen image as "testY.png".
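
The fixed one-second sleeps in the login steps can be too short on a slow connection and needlessly long on a fast one. A possible alternative is to poll until the next element actually exists. The helper below is a generic sketch with a hypothetical name, `wait_until`; it is not part of Selenium, which ships its own `WebDriverWait` class for the same purpose.

```python
import time


def wait_until(condition, timeout=10.0, interval=0.2):
    """Call `condition()` repeatedly until it returns a truthy value.

    Returns that value, or raises TimeoutError after `timeout` seconds.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)
```

With a live driver, a call such as `wait_until(lambda: driver.find_elements(By.NAME, "passwd"))` would pause only until the password field appears, because `find_elements` returns an empty list while nothing matches.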

After you finish your work, please don't forget the following commands, so that the browser does not keep using memory on your PC.

    driver.close()  # close the current browser window
    driver.quit()   # end the browser session and the driver process
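
If the script raises an exception halfway through, these cleanup calls are never reached and the browser keeps running. One way to guard against that, shown here as a sketch rather than as the tutorial's own code, is a small context manager. `managed_driver` and `FakeDriver` are hypothetical names; `FakeDriver` only stands in for `webdriver.Chrome` so the example runs without a browser.

```python
from contextlib import contextmanager


@contextmanager
def managed_driver(factory):
    """Create a driver with `factory()` and always quit it afterwards."""
    driver = factory()
    try:
        yield driver
    finally:
        # quit() ends the whole browser session, even after an exception.
        driver.quit()


class FakeDriver:
    """Stand-in for webdriver.Chrome so the sketch runs without a browser."""

    def __init__(self):
        self.closed = False

    def quit(self):
        self.closed = True


if __name__ == "__main__":
    with managed_driver(FakeDriver) as driver:
        pass  # scraping steps would go here
    print(driver.closed)
```

With Selenium installed, `managed_driver(webdriver.Chrome)` would be the real call.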

You can also download images from Yahoo Japan Auctions with these libraries.

    import os
    import re
    import sys

    import pandas as pd
    import requests
    from bs4 import BeautifulSoup
    from six.moves.urllib import request

    # Command-line arguments passed to the script.
    args = sys.argv

This may be a little difficult, but I show how it works in the YouTube seminar. Please watch it for the details.

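    def dl_y_images():
        # html (page source) and dPicName (file name prefix) are defined elsewhere.
        images = []
        soup = BeautifulSoup(html, "html.parser")
        for link in soup.find_all("img"):
            # Keep only <img> tags that reference the auction image host.
            if 'https://auctions.c.yimg.jp' in str(link):
                print(link)
                # Guard against <img> tags that have no src attribute.
                src = link.get("src") or ""
                if 'jpg' in src or 'gif' in src or 'png' in src:
                    images.append(src)
                    print(src)

        couCou = 1  # running number used in the saved file names
        for image in images:
            # Links without a scheme need an explicit "https:" prefix.
            # The response is named res so it does not shadow the re module.
            if image.find("https") > -1:
                res = requests.get(image)
            else:
                res = requests.get("https:" + image)
            print('Downloading:', image)
            pName = dPicName + str(couCou) + ".jpg"
            with open(pName, 'wb') as f:
                f.write(res.content)
            couCou = couCou + 1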
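
The download loop names every file with a `.jpg` suffix, even when the source is a GIF or PNG. If you want the saved name to match the real format, the extension can be taken from the URL path. The helper `image_file_name` below is a hypothetical sketch; its `prefix` argument plays the role of `dPicName`.

```python
import os.path
from urllib.parse import urlparse


def image_file_name(prefix: str, index: int, url: str) -> str:
    """Build a file name such as 'item3.png' that keeps the URL's extension.

    Falls back to '.jpg' when the URL path has no recognised extension.
    """
    # urlparse() drops the query string, so "a.png?x=1" still yields ".png".
    ext = os.path.splitext(urlparse(url).path)[1].lower()
    if ext not in (".jpg", ".jpeg", ".gif", ".png"):
        ext = ".jpg"
    return f"{prefix}{index}{ext}"
```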

Let me add one piece of advice. If you need the image files, you need to understand what their links look like. Yahoo Japan Auctions serves its images from the following URL, so it is the key to collecting them.

https://auctions.c.yimg.jp
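
That check can be made a little stricter by comparing the host name of each link instead of searching the whole tag text. The following is a sketch under the assumption that every wanted image sits on exactly this host; `normalize_image_url` is a hypothetical helper name.

```python
from urllib.parse import urlparse

IMAGE_HOST = "auctions.c.yimg.jp"  # host named in the text above


def normalize_image_url(src):
    """Return an absolute https URL if `src` points at IMAGE_HOST, else None."""
    if not src:
        return None
    # Scheme-relative links ("//host/path") get an explicit https scheme.
    url = "https:" + src if src.startswith("//") else src
    parsed = urlparse(url)
    if parsed.scheme == "https" and parsed.hostname == IMAGE_HOST:
        return url
    return None
```

Passing each `src` value through this function before downloading would skip icons and banners hosted elsewhere.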

To get the source code, check the comments on my YouTube video.
